Quickly generate a sitemap and submit it to Google and Baidu to speed up indexing

See the original article on my personal blog for more:

https://alvincr.com/2021/01/sitemap/

0 Basic knowledge

1 Differences between sitemap formats

The xml and txt formats are for search engines, while the html format is for users.

| Sitemap format | Description |
| --- | --- |
| html | 1. Lets users quickly find pages within the website. 2. Flattens the structure of the website. 3. Gives search engine spiders pages to crawl. |
| xml | 1. Supported by every search engine's webmaster platform, so it can be submitted directly there. 2. Carries extra data such as Priority and Lastmod (last modification time), which helps search engine spiders judge pages; overall it is the most search-engine-friendly format. |
| txt | 1. The data is concise and loads quickly. 2. Can be submitted to Google quickly by ping. |

For the same amount of data, the xml and txt formats are smaller than the html format (many files are only tens of KB). Search engines do not need to load many resources when reading them, so crawling is faster.

By contrast, the html format is meant for users. It looks better from the user's point of view, and users can find the page they want much faster than with xml or txt.
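To make the difference between the xml and txt formats concrete, here is a minimal Python sketch that writes the same two pages in both formats. The function names, the example dates, and the priority values are made up for illustration; only the sitemaps.org namespace is the standard one.

```python
import xml.etree.ElementTree as ET

# Standard namespace for XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_xml_sitemap(entries):
    """Return an XML sitemap string for (url, lastmod, priority) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, priority in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
        ET.SubElement(url, "priority").text = f"{priority:.1f}"
    return ET.tostring(urlset, encoding="unicode")


def build_txt_sitemap(entries):
    """Return a txt sitemap: one URL per line, no extra data."""
    return "\n".join(loc for loc, _, _ in entries) + "\n"


# Hypothetical entries; dates and priorities are illustrative only.
entries = [
    ("https://alvincr.com/", "2021-01-08", 1.0),
    ("https://alvincr.com/2021/01/sitemap/", "2021-01-08", 0.8),
]

xml_sitemap = build_xml_sitemap(entries)
txt_sitemap = build_txt_sitemap(entries)
print(xml_sitemap)
print(txt_sitemap)
```

The txt version keeps only the URLs, which is why it is smaller but cannot carry Priority or Lastmod.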

1 Reason

One of my articles was copied without permission, yet the original on my site had not been indexed by Google or Baidu…

2 Add manually

1 Generate the sitemap

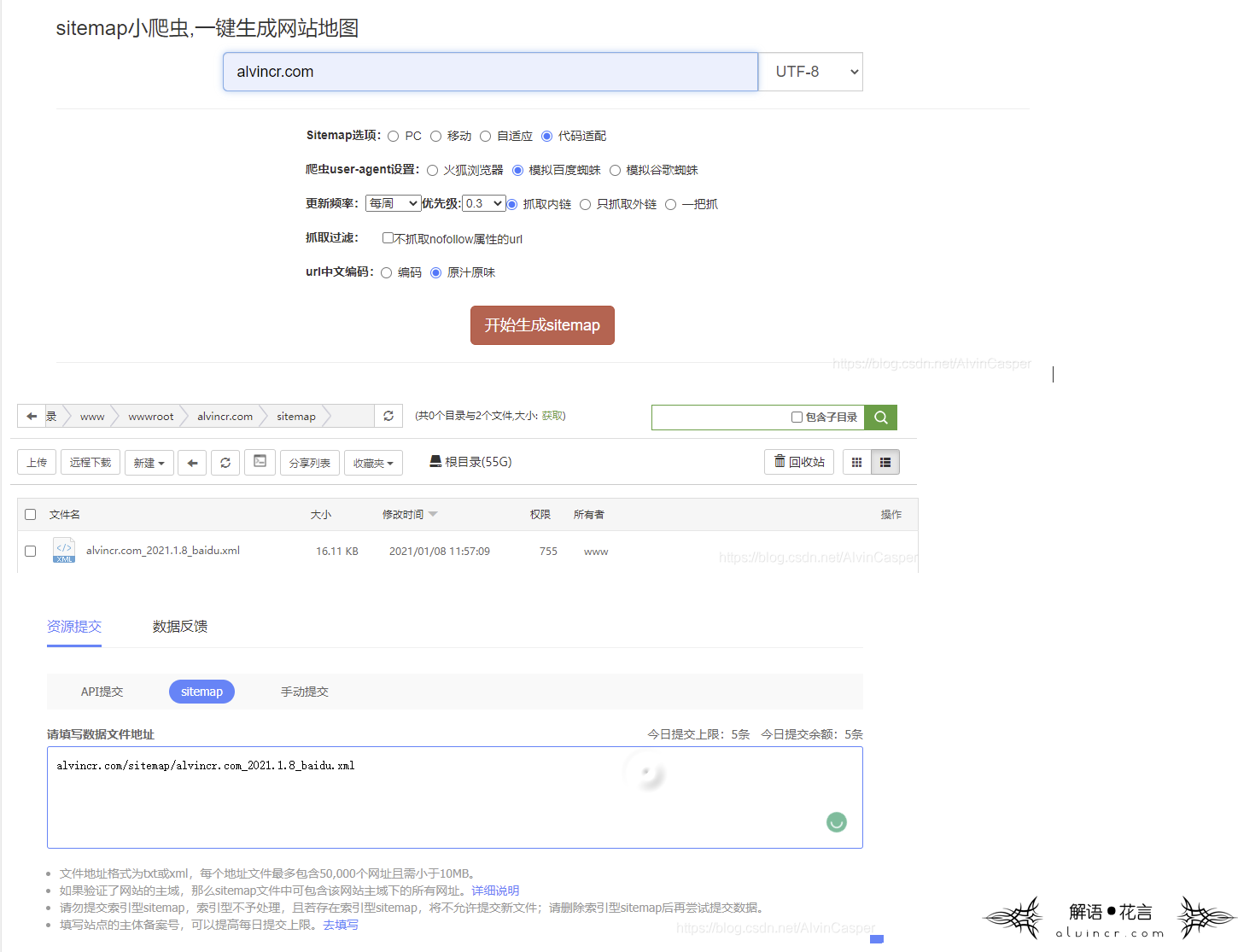

Here I used the following tool to generate a sitemap automatically:

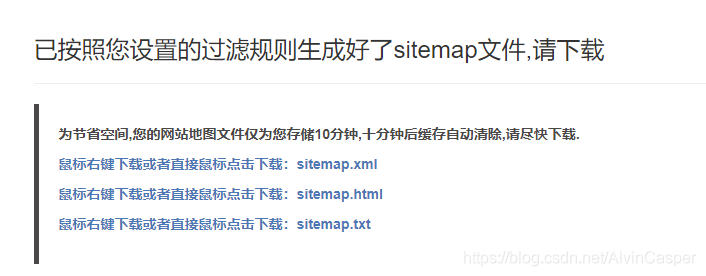

http://tools.bugscaner.com/sitemapspider

First, generate a sitemap suitable for Google. Alvincr's personal settings are as follows. Alvincr believes it is best to create separate sitemaps for Baidu and Google.

After generation, download the sitemap in xml format. The txt format is outdated and best avoided.
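Before uploading, it can help to confirm that the downloaded file really lists the pages you expect. Here is a minimal Python sketch, assuming the generated file follows the standard sitemaps.org schema; the sample XML and the function name `list_sitemap_urls` are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace prefix mapping for the standard sitemap schema.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_sitemap_urls(xml_bytes):
    """Return every <loc> value found in an XML sitemap."""
    root = ET.fromstring(xml_bytes)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", NS)
        if loc.text
    ]


# Hypothetical sample; in practice read the downloaded file in binary mode.
sample = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://alvincr.com/</loc></url>
  <url><loc>https://alvincr.com/2021/01/sitemap/</loc></url>
</urlset>"""

urls = list_sitemap_urls(sample.encode("utf-8"))
for url in urls:
    print(url)
```

If the list is empty or is missing pages, regenerate the sitemap before uploading it.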

2 Place the sitemap

Create a directory under alvincr.com (I created a folder named sitemap), upload the downloaded file to that folder, and then rename the file to alvincr.com_2021.1.8.xml

3 Provide the sitemap

The following is alvincr.com's translation of the official Google document: https://developers.google.com/search/docs/advanced/sitemaps/build-sitemap?hl=zh-cn#addsitemap

Google does not check a sitemap every time it crawls a website. We check a sitemap only when we first notice it, and afterwards only when you use the ping function to notify us that it has changed. Please notify Google about a sitemap only when you create or update it. If the sitemap has not changed, please do not submit or ping it multiple times.

There are several different ways you can provide sitemaps to Google:

Insert the following line anywhere in your robots.txt file to specify the path to your sitemap:

Sitemap: http://example.com/sitemap_location.xml

Use the "ping" function to request that we crawl the sitemap. Send an HTTP GET request like the following:

http://www.google.com/ping?sitemap=<complete_url_of_sitemap>

For example:

http://www.google.com/ping?sitemap=https://example.com/sitemap.xml

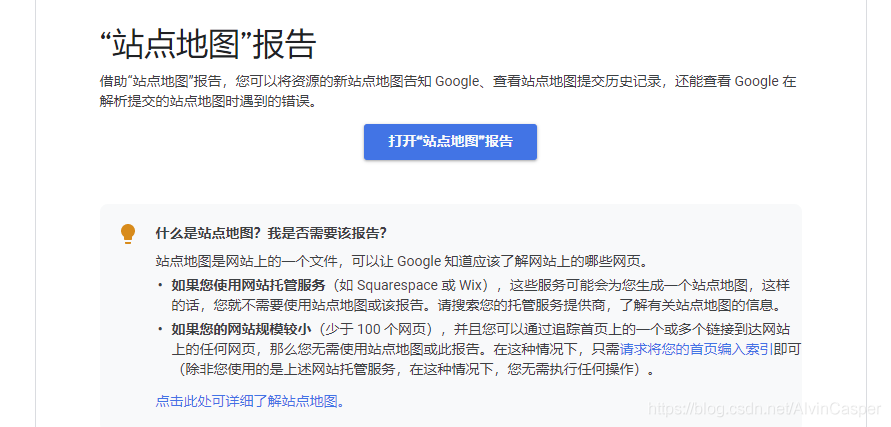

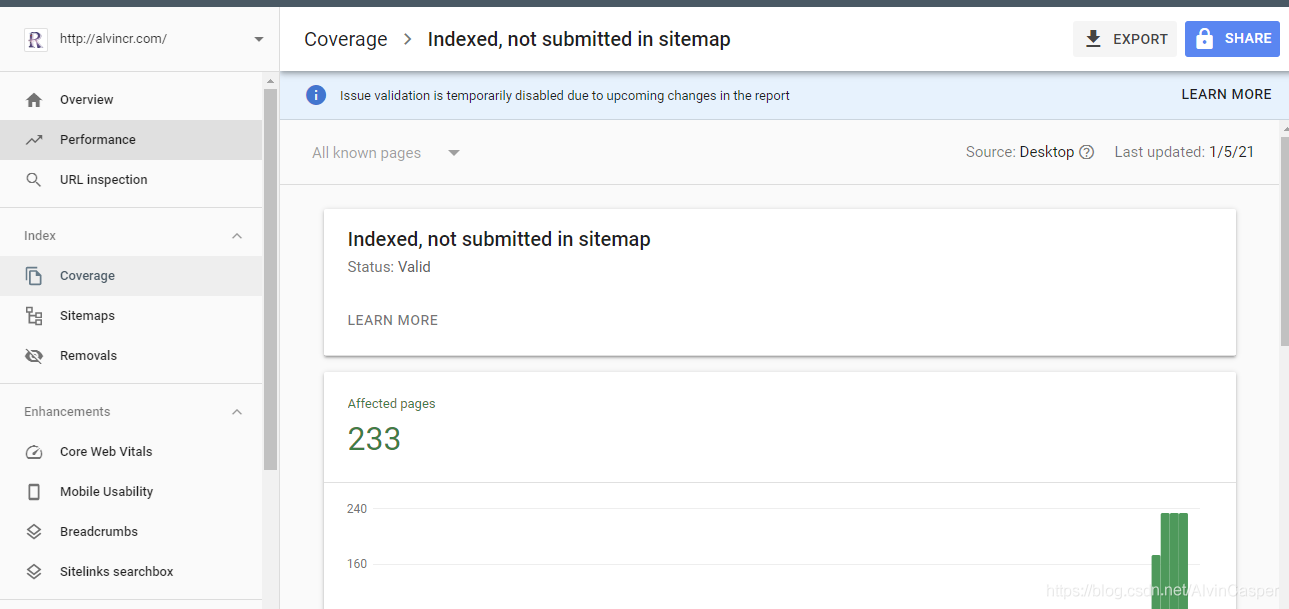

Method 1: Use the Search Console sitemap report

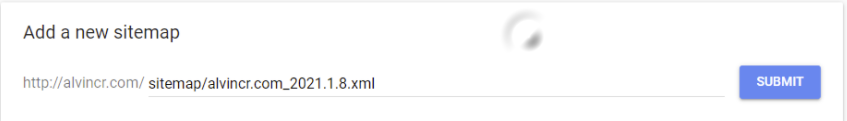

Open the sitemap report and select the alvincr.com website under search property in the upper right corner.

Enter the path of the sitemap on the server. Following the steps above, I put it in the sitemap folder and named it alvincr.com_2021.1.8.xml. Do not add / in front of the path, because Google adds it by default.

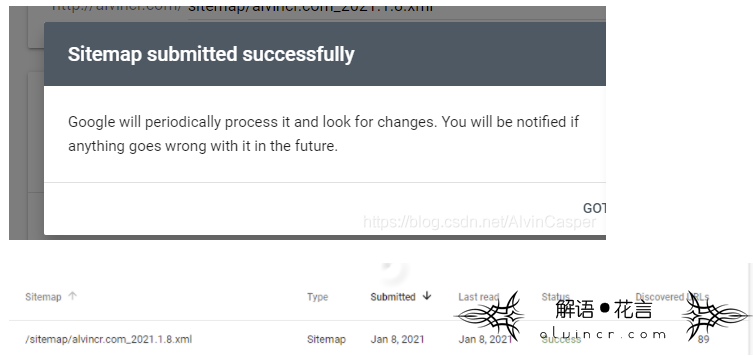

The page displayed after the sitemap is added successfully:

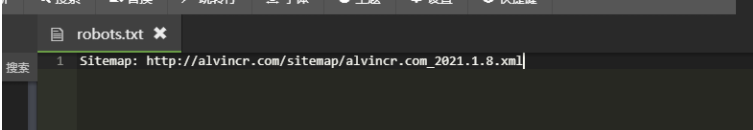

Method 2: Use robots

robots.txt (always lowercase) is an ASCII-encoded text file stored in the root directory of a website. It usually tells the bots of web search engines (also known as web spiders) which content on the website must not be fetched by search engine bots and which content may be fetched. Because URLs are case sensitive on some systems, the file name robots.txt should always be lowercase. robots.txt should be placed in the root directory of the website. If you want to define the behavior of search engine robots separately for subdirectories, you can merge your custom settings into the robots.txt in the root directory, or use robots metadata.

I remember writing an article about robots before; you can find it with the search function. However, I deleted the original robots file while dealing with that problem, so here I need to create a new blank file and add the following line:

Sitemap: http://alvincr.com/sitemap/alvincr.com_2021.1.8.xml
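To double-check that the line is picked up, here is a minimal Python sketch using the standard library's `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later). The helper name `sitemaps_in_robots` is made up for illustration, and the robots.txt text is passed in as a string rather than fetched from the site.

```python
from urllib.robotparser import RobotFileParser


def sitemaps_in_robots(robots_text):
    """Return the Sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


# The single line added to the new, otherwise blank robots.txt.
robots_text = "Sitemap: http://alvincr.com/sitemap/alvincr.com_2021.1.8.xml\n"

found = sitemaps_in_robots(robots_text)
print(found)
```

An empty list would mean the Sitemap line is missing or misspelled.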

Method 3: Use the ping function, which I find quite convenient

http://www.google.com/ping?sitemap=http://alvincr.com/sitemap/alvincr.com_2021.1.8.xml
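The ping request is just a GET URL with the sitemap address as a query parameter. Here is a minimal Python sketch that builds that URL with the sitemap address percent-encoded; the function name `build_ping_url` is made up for illustration. Google has since announced that it is retiring this ping endpoint, so the sketch only constructs the URL and sends nothing.

```python
from urllib.parse import urlencode

# Ping endpoint as given in the Google document quoted above.
PING_ENDPOINT = "http://www.google.com/ping"


def build_ping_url(sitemap_url):
    """Return the ping URL with the sitemap address safely encoded."""
    return f"{PING_ENDPOINT}?{urlencode({'sitemap': sitemap_url})}"


ping_url = build_ping_url("http://alvincr.com/sitemap/alvincr.com_2021.1.8.xml")
print(ping_url)
```

Encoding the parameter avoids problems if the sitemap address itself contains characters such as `&` or `?`.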


2021.1.8 supplement:

Do not use the method above to ping the sitemap; it is better to ping the newly created URL.

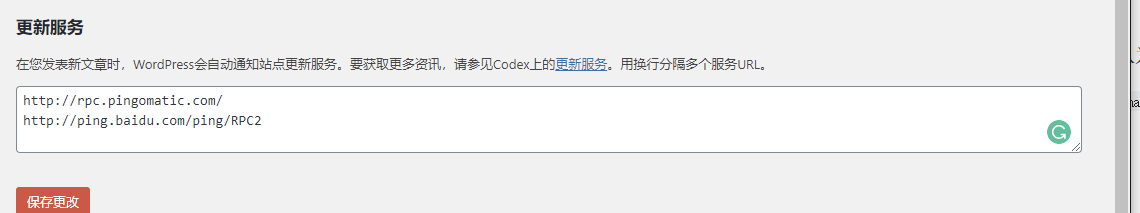

Method 4: 2021.1.8 supplement

In the WordPress admin panel, go to Settings, then Writing, and add an update service. For example, Baidu's update service is http://ping.baidu.com/ping/RPC2
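For the curious, update services like this are XML-RPC endpoints. Here is a minimal Python sketch of the kind of request body such a ping carries, assuming the common `weblogUpdates.ping` method with a site name and site URL as parameters. Whether Baidu's endpoint accepts exactly this call is an assumption, the site name is a placeholder, and nothing is sent over the network.

```python
import xmlrpc.client


def build_ping_payload(site_name, site_url):
    """Return the XML-RPC request body for a weblogUpdates.ping call."""
    return xmlrpc.client.dumps(
        (site_name, site_url),
        methodname="weblogUpdates.ping",  # assumed method name
    )


# Placeholder site name; the URL is the blog's home page.
payload = build_ping_payload("alvincr", "https://alvincr.com/")
print(payload)
```

WordPress builds and sends a request of this kind on its own when a post is published, so the sketch is only meant to show what the setting does.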

3 Add automatically

Install an SEO plug-in and follow its settings. Almost nothing else is required, since the plug-in adds a sitemap automatically by default.

Personally, I feel that All in One SEO does not work well. After all this time I still have not seen any result, though it may be a problem with my settings.

New Search Console (Google official tool)

4 Appendix: Submitting to Baidu

Address: https://ziyuan.baidu.com/linksubmit/index

Generate a sitemap file for Baidu as described above, upload it to the server, and rename it.

Disclaimer: The author and ownership of this article belong to alvincr, address: alvincr.com